Ceci n’est pas une peinture — making art with AI

Earlier this year, the jury of the Colorado State Fair’s annual art competition awarded Jason Allen first place in the digital art category for his “Theatre d’Opera Spatial”. At the time, the judges did not know how Allen had created the piece. He had made it with Midjourney, an artificial intelligence program that generates surreal images from text prompts. He later told the New York Times that he had never deceived anyone. The award set a precedent: It was one of the first times an AI-generated image won a juried art competition, a pivotal moment in the history of both art and AI.

Midjourney, the tool Allen used, is just one of several AI image-generation tools that have become available to the public this year (see also: DALL-E 2, Stable Diffusion, Craiyon, AICAN). Given a text prompt (e.g., “cyberpunk last supper by Leonardo da Vinci”), models of this type generate images never seen before, drawing on what they learned from extensive training material.

One of the first things any deep-generative artist will tell you about AI image generation is how easy it is to generate art: You only need to describe an image using natural language, as you would when talking to another person. Type the prompt into the AI image generator, and in a matter of seconds, an image appears in front of you.

Because the process is so simple, it’s tempting to say that no human creativity is involved. Having learned from their training datasets, image-producing models can seem to become independent artist-thinkers: Once they receive an input, they produce an image without further instructions. However, the models themselves have no will, agency, or memory: The same prompt can produce different results each time, because generation involves an element of random sampling.

The human, editorial side is unavoidable and becomes the core of the artistic process: The artist has to consider the training material, fine-tuning, and how much training time to plan for, which according to Jason Allen can take weeks. Moreover, the software serves up hundreds of outputs in a matter of seconds, and a human ultimately has to cherry-pick among them according to their own preferences. The result is often too undefined and raw, so the artist must edit it, which is why many people consider generative modelling a craft, or even an art.

Generative art and its siblings

But what is generative art? Generative art is a style of art in which artists program machines to generate patterns by following rigid algorithmic instructions, often with some element of chance. The results can take the form of mathematical symmetries or of more realistic and complex portraits.

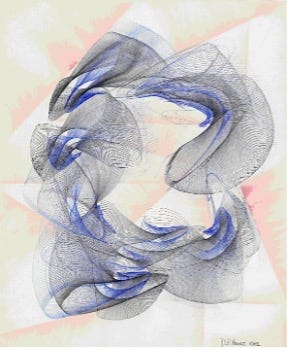
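The “rigid instructions plus chance” recipe can be sketched in a few lines of Python. This is a toy illustration, not a reconstruction of any artist’s or tool’s method: A fixed rule (every row is mirrored around its vertical axis) supplies the symmetry, while a seeded random number generator decides which cells are filled. The function `symmetric_pattern` and its parameters are hypothetical names chosen for this example.

```python
import random
from typing import Optional


def symmetric_pattern(width: int, height: int, seed: Optional[int] = None,
                      density: float = 0.5) -> list[str]:
    """Generate a left-right mirror-symmetric pattern of '#' and '.' cells.

    The rule (mirror every row) is rigid; which cells get filled is chance.
    """
    rng = random.Random(seed)  # seeded RNG: the same seed reproduces the same piece
    half = (width + 1) // 2  # columns chosen freely; the rest are mirrored copies
    rows = []
    for _ in range(height):
        left = ["#" if rng.random() < density else "." for _ in range(half)]
        # Mirror the left half; for odd widths, the middle column is not duplicated.
        right = left[: width // 2][::-1]
        rows.append("".join(left + right))
    return rows


if __name__ == "__main__":
    for row in symmetric_pattern(9, 5, seed=42):
        print(row)
```

Keeping `seed` fixed reproduces the same piece, while changing it yields a new, equally symmetric one: The rule stays rigid, and only the chance element varies.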

While new generative art tools have recently been getting a lot of attention, the idea of programming machines to produce art on canvas is nothing new. Its roots even predate the widespread availability of personal computers. In the 1960s, the artist and philosophy professor Desmond Paul Henry (1921–2004) built several drawing machines around the components of analogue bombsight computers used during World War II. These computers, originally designed to calculate the release trajectory that would carry a bomb accurately onto its target, were adapted to produce drawings by controlling the relative motion of a physical canvas and a suspended drawing implement (a biro, in the first version of the machine).

Henry combined these computers with other components to create electronically operated drawing machines that relied mainly on the “mechanics of chance”. They produced abstract, curvilinear, repetitive line drawings whose beauty recalls that of mathematical curves. Crucially, Henry could intervene at any time to deflect and modify the trajectories, so each drawing became a unique, unrepeatable piece of art.

The spontaneous, interactive element of Henry’s machines anticipated by some 50 years the core of the artistic process in contemporary generative art: The text prompt. Interacting with a modern generative model through a text prompt can feel like magic, but it isn’t.

To understand how a (deep) generative model works, and why it matters so much from an AI perspective, it helps to compare it with its counterpart: discriminative modelling. Let’s look at an example.

Suppose we have a dataset of paintings, some by Van Gogh and others by different artists (e.g., Klimt, Cézanne). With enough data, we could train a discriminative model to predict whether a new painting is by Van Gogh or by someone else. The model would learn that certain features (e.g., colours, shapes, textures) make it more likely that a painting is by the Dutch artist, and it would raise its prediction for paintings that show those features.

Over time, the model learns to discriminate between the two groups (Van Gogh vs. other) and outputs the probability that a new observation belongs to the Van Gogh category. To do so, however, discriminative modelling requires every training observation to have a label: All Van Gogh paintings are labelled 1, and all other paintings 0.

Generative models, by contrast, don’t need a labelled dataset¹: They output sets of pixels, i.e., new images, and are trained to learn the distribution of the training images, so that what they produce looks as if it could have come from the training set.

Key points:

· Discriminative modelling estimates p(y|x): the probability of a label y given an observation x. It requires a labelled dataset.

· Generative modelling estimates p(x): the probability of observing x. It doesn’t necessarily require a labelled dataset.
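The two key points can be written side by side, using the same symbols (x an observation, y its label). Bayes’ rule connects them: A generative model of p(x | y) for each class, together with the class frequencies p(y), is enough to recover p(y | x), whereas p(y | x) on its own says nothing about how likely an image x is in the first place.

$$
\begin{aligned}
\text{discriminative:}\quad & p(y \mid x) \\
\text{generative:}\quad & p(x), \ \text{or } p(x \mid y) \text{ for each class when labels are available} \\
\text{link (Bayes' rule):}\quad & p(y \mid x) = \frac{p(x \mid y)\, p(y)}{\sum_{y'} p(x \mid y')\, p(y')}
\end{aligned}
$$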

In other words, the key difference is that discriminative modelling estimates the probability that an observation x (a painting, i.e., its pixels) belongs to the learned category y (Van Gogh), whereas generative modelling estimates the probability of seeing the observation x at all.

Once trained, generative models can produce completely novel images that share features with the training set. What the model learns amounts to a highly sophisticated way of interpolating between images: Not magic, but impressive nonetheless.
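As a toy illustration of estimating p(x) without labels, the Python sketch below swaps in the simplest possible generative model: an independent Gaussian per pixel, fitted to tiny made-up 2×2 grayscale “images”. Tools such as Stable Diffusion and DALL-E 2 instead use deep diffusion models that learn vastly richer distributions; only the shape of the idea carries over: Fit a density to unlabelled data, then score new images with it or sample from it. The function names and the training data are hypothetical.

```python
import math
import random
from statistics import fmean, pvariance


def fit_diagonal_gaussian(images: list[list[float]]) -> tuple[list[float], list[float]]:
    """Estimate p(x) as an independent Gaussian per pixel. No labels are used."""
    columns = list(zip(*images))  # one column of values per pixel position
    means = [fmean(col) for col in columns]
    # Floor the variance so a constant pixel cannot cause a division by zero.
    variances = [max(pvariance(col, mu), 1e-6) for col, mu in zip(columns, means)]
    return means, variances


def log_density(x, means, variances) -> float:
    """log p(x) under the fitted model: higher means 'more like the training set'."""
    return sum(
        -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, means, variances)
    )


def sample(means, variances, rng: random.Random) -> list[float]:
    """Draw a brand-new image from the learned distribution."""
    return [rng.gauss(m, math.sqrt(v)) for m, v in zip(means, variances)]


# Hypothetical training set: 200 unlabelled 2x2 grayscale "images", flattened to
# 4 pixel intensities, each with a bright top row and a dark bottom row.
rng = random.Random(0)
training = [[rng.gauss(mu, 0.05) for mu in (0.9, 0.8, 0.2, 0.1)] for _ in range(200)]

means, variances = fit_diagonal_gaussian(training)
new_image = sample(means, variances, rng)  # novel, yet shares the bright-top feature
print("sampled image:", [round(p, 2) for p in new_image])
print("log p(typical):", round(log_density([0.9, 0.8, 0.2, 0.1], means, variances), 1))
print("log p(upside-down):", round(log_density([0.1, 0.2, 0.8, 0.9], means, variances), 1))
```

The sampled image copies no training example, yet it inherits their shared feature, and an upside-down image scores as far less probable. Fitting one such model per class would correspond to the labelled case p(x | y) mentioned in the footnote.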

Good artists copy, great artists steal

Since the AI is trained on images pulled from the internet, it learns from a store of pictures that people have chosen to share. As a consequence, using these models blindly often reveals how deeply social bias is baked into our datasets. For example, OpenAI found that prompting with the word “nurse” tended to produce images of women, while “CEO” tended to produce men. Bias isn’t limited to gender; it extends to race as well. One remedy is to train on a more socially and ethnically diverse dataset that better represents different ethnicities and genders.

More concerning in the long term, however, is the potential of these tools to spread misinformation. In a test that used works shown at Art Basel 2016 (an annual fair showcasing contemporary art) as a point of comparison, the authors of AICAN (Artificial Intelligence Creative Adversarial Network) found that people were very often unable to tell AICAN-generated images apart from artworks produced by human artists (see also Tidio’s recent test confirming this trend). This suggests that the public’s “visual literacy” is not very high, which may pose a risk in a society that has not yet integrated into daily life more sophisticated tools for telling human- and AI-generated images apart.

Aside from these ethical concerns, there is also the question of plagiarism. Training datasets are built by scraping millions of images from the web, images that were necessarily made by someone else. This amounts to a form of plagiarism affecting artists who upload their work to the internet (perhaps for self-promotion) and who may be unaware that they are helping train an AI that will compete with them.

Is society doomed?

Allen’s “Theatre d’Opera Spatial” was a test of how the world would view AI-generated art, and the award drew mixed responses. Some people accused him of cheating because he hadn’t made the work himself; others, Allen included, took pride in seeing an AI-generated piece beat the competition.

When a new technology gains traction, it always fuels public debate. In the case of generative models, enthusiasts foresee the liberation of human creativity from the burden of technical expertise, while sceptics fear the end of traditional image-making as an art form.

Many people compare these reactions to those that followed the invention of photography in 1822, which many artists at the time saw as an insult to human creativity and artistry. In the end, photography revolutionised the visual arts rather than killing them, and most people now acknowledge that the human, not the device, is responsible for the image.

Whether generative art becomes the new photography is ultimately up to us. It will depend on how we answer important questions such as: Who does the technology belong to? Who can use it? What uses are allowed? Do deep learning models expand or restrict our freedom?

It is us, not the technology, who choose the direction.

¹ Generative models can, however, also be trained on a labelled dataset if we wish to generate observations from each distinct class.