Finding the Best Training Data for Your Machine Learner with Active Learning

Computers perform better than humans at arithmetic and chess, but if there is one task in which humans are superior, it is recognizing what an image shows. But for how long? In 2015 there was a breakthrough in computer science: the ResNet system won the international image recognition competition ImageNet Challenge with a score of 96.5% (the share of images for which the correct label was among its top five guesses). People achieve about 95% on that task. For the first time, a computer was better than humans at identifying objects in images.

The winning system used machine learning to recognize objects in images. The advantage of machine learning is that you do not need to specify a task in great detail. Instead, you provide the computer with examples of inputs and the required outputs. If the task is recognizing the content of images, the inputs are images and the associated outputs are labels describing what the images contain. The machine learner then uses these examples to learn how to assign labels to any image, including images it has never seen.

If we want the machine learner to perform well, we need to supply it with many good training examples. But which examples are best? An image that is very similar to one of the known examples will probably not contribute much to the learning process, but an image that is quite different might be a good new training example.
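The intuition that near-duplicates add little can be made concrete with a distance measure. The sketch below is a hypothetical illustration, not taken from any active learning library: it assumes each image has already been turned into a numeric feature vector (the 2-D vectors here are invented) and scores a candidate by its Euclidean distance to the nearest labelled example.

```python
import math


def novelty(candidate, labelled):
    """Distance from a candidate feature vector to its nearest labelled example.

    A small value means the candidate closely resembles something the learner
    has already seen; a large value means it is unlike any known example.
    """
    return min(math.dist(candidate, example) for example in labelled)


# Hypothetical 2-D feature vectors standing in for images.
labelled = [(0.0, 0.0), (1.0, 0.0)]
candidates = {"near_duplicate": (0.1, 0.0), "unusual": (5.0, 5.0)}

for name, features in candidates.items():
    print(name, round(novelty(features, labelled), 3))
```

The near-duplicate scores about 0.1 and the unusual vector about 6.4, matching the idea that the second one is the more promising candidate for labelling.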

It would be nice to have an automatic method for determining which training examples a machine learner would benefit from most. We could then restrict human labelling effort to those examples and improve the machine learner quickly. Such a method exists: it is called active learning.

Active learning

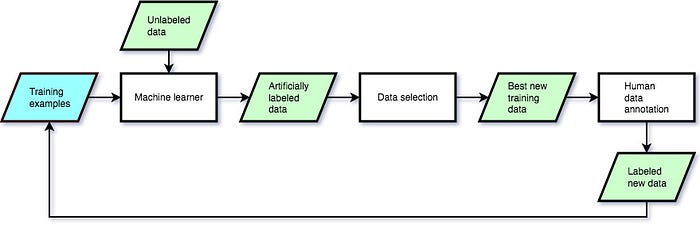

Active learning is a semi-automatic method for expanding the training set of a machine learner. For example, if we want to learn the difference between images of cats and images of dogs, we can train a machine learner on images that are labelled either “cat” or “dog”. We can expand this set of training examples with active learning by performing the following six steps:

1. train the machine learner with all available labelled training examples

2. use the learner to classify many unlabelled images

3. select the images which could be most instructive for the learner

4. ask a human to assign correct labels to these images

5. add the newly labelled images to the training set and retrain

6. repeat steps 2–5 until the performance reaches an acceptable level
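The six steps can be sketched as a small loop. This is a toy illustration under stated assumptions, not the method used in any particular project: instead of images it uses numbers between 0 and 1 whose (hypothetical) true label is 1 above 0.37, the "human" is simulated by the hypothetical function `ask_human`, and `ThresholdLearner`, `batch_size`, `target` and `max_rounds` are invented for the example. Step 3 uses the lowest-confidence rule (uncertainty sampling).

```python
import math
import random

# Hypothetical toy task: numbers in [0, 1] whose true label is 1 when x > 0.37.
# A real project would use images and a real model; this only shows the loop.
TRUE_BOUNDARY = 0.37


def ask_human(x):
    """Simulated annotator (step 4); a real setup would show x to a person."""
    return int(x > TRUE_BOUNDARY)


class ThresholdLearner:
    """Tiny 1-D classifier: its boundary lies midway between the largest
    example labelled 0 and the smallest example labelled 1."""

    def fit(self, xs, ys):
        lo = max((x for x, y in zip(xs, ys) if y == 0), default=0.0)
        hi = min((x for x, y in zip(xs, ys) if y == 1), default=1.0)
        self.boundary = (lo + hi) / 2
        return self

    def predict_proba(self, x):
        """Estimated probability of label 1 (0.5 exactly at the boundary).
        The steepness factor 20 is an arbitrary choice for this toy."""
        return 1 / (1 + math.exp(-20 * (x - self.boundary)))

    def predict(self, x):
        return int(self.predict_proba(x) >= 0.5)

    def confidence(self, x):
        """Probability of the predicted label; low values mean 'unsure'."""
        p = self.predict_proba(x)
        return max(p, 1 - p)


def accuracy(learner, test):
    return sum(learner.predict(x) == y for x, y in test) / len(test)


def active_learning(labelled, pool, test, batch_size=2, target=0.98, max_rounds=10):
    xs = [x for x, _ in labelled]
    ys = [y for _, y in labelled]
    pool = list(pool)
    for _ in range(max_rounds):
        learner = ThresholdLearner().fit(xs, ys)  # steps 1 and 5: (re)train
        if accuracy(learner, test) >= target or not pool:
            break                                 # step 6: good enough, stop
        # Steps 2-3: score every unlabelled item, keep the least confident ones.
        pool.sort(key=learner.confidence)
        query, pool = pool[:batch_size], pool[batch_size:]
        for x in query:                           # step 4: ask for labels
            xs.append(x)
            ys.append(ask_human(x))
    learner = ThresholdLearner().fit(xs, ys)      # include the last labels
    return learner, accuracy(learner, test), len(xs)


random.seed(0)
pool = [random.random() for _ in range(200)]                 # unlabelled data
test = [(x, ask_human(x)) for x in (random.random() for _ in range(100))]
seed_set = [(0.05, ask_human(0.05)), (0.95, ask_human(0.95))]
learner, acc, n_labelled = active_learning(seed_set, pool, test)
print(f"boundary={learner.boundary:.3f} accuracy={acc:.2f} labelled={n_labelled}")
```

Because each round labels the items closest to the current boundary, the boundary narrows in on 0.37 after only a handful of queries; picking the same number of items at random would mostly spend labels on easy cases far from the boundary.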

The most challenging part of this active learning process is step 3, and there are two common ways of performing it. First, we can ask the machine learner to state, for each predicted label, how confident it is about the prediction, and select the items with the lowest confidence (uncertainty sampling). Second, we can use several competing machine learners to predict labels and select the items on which they disagree most (query-by-committee). More detailed information about both approaches can be found in a study by Burr Settles.
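Both selection rules fit in a few lines. The sketch below assumes the learner's predictions are available as label-to-probability dictionaries and the committee's predictions as lists of votes; the function names are hypothetical. For the committee it uses vote entropy, one common way of measuring disagreement; other measures exist.

```python
import math
from collections import Counter


def least_confident(probabilities, k):
    """Uncertainty sampling: indices of the k items whose most likely label
    has the lowest predicted probability.

    probabilities: one dict per unlabelled item, mapping label -> probability.
    """
    confidence = [max(p.values()) for p in probabilities]
    return sorted(range(len(probabilities)), key=lambda i: confidence[i])[:k]


def vote_entropy(votes):
    """Committee disagreement on one item: entropy of the label votes
    (0 when all members agree)."""
    counts = Counter(votes)
    n = len(votes)
    return -sum(c / n * math.log(c / n) for c in counts.values())


def most_disputed(committee_votes, k):
    """Query-by-committee: indices of the k items with the highest vote
    entropy. committee_votes holds one list of member votes per item."""
    scores = [vote_entropy(v) for v in committee_votes]
    return sorted(range(len(committee_votes)), key=lambda i: -scores[i])[:k]


# Hypothetical predictions for three unlabelled images.
probs = [
    {"cat": 0.95, "dog": 0.05},
    {"cat": 0.55, "dog": 0.45},
    {"cat": 0.20, "dog": 0.80},
]
print(least_confident(probs, k=1))  # [1]

votes = [
    ["cat", "cat", "cat"],
    ["cat", "dog", "cat"],
    ["dog", "dog", "dog"],
]
print(most_disputed(votes, k=1))  # [1]
```

In both cases the second image is selected: the single learner is barely more sure of "cat" than of "dog", and the committee members do not agree on it.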

Example: automatic spelling correction

An example of an application of active learning is the study by Michele Banko and Eric Brill on automatic spelling correction. They started with a training text of one million words and achieved a score of 96% on their task. By adding randomly selected texts with a total length of six million words to the training data, they managed to improve the score to 97%. However, when the extra six million words were selected with active learning (query-by-committee), the score went up to almost 99%. The error rate thus dropped from about 3% with random selection to just over 1% with active learning.
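The size of that improvement is easier to see as a relative error reduction. The short calculation below uses the accuracies quoted above; "almost 99%" is rounded up to 0.99, so the active learning figures are slight overestimates.

```python
def error_reduction(baseline_accuracy, new_accuracy):
    """Fraction of the baseline's errors that the new system removes."""
    baseline_error = 1 - baseline_accuracy
    new_error = 1 - new_accuracy
    return (baseline_error - new_error) / baseline_error


# Accuracies from the spelling-correction example ("almost 99%" taken as 0.99).
start, random_extra, active_extra = 0.96, 0.97, 0.99

print(f"random selection: {error_reduction(start, random_extra):.0%} fewer errors")
print(f"active learning:  {error_reduction(start, active_extra):.0%} fewer errors")
print(f"active vs random: {error_reduction(random_extra, active_extra):.0%} fewer errors")
```

With the same amount of extra text, random selection removes about a quarter of the original errors, while active learning removes about three quarters, leaving roughly a third of the errors that random selection leaves.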

When active learning does not help

Several studies have shown that active learning can be applied successfully to improve the performance of machine learners. Adding training examples will often improve a machine learner; the advantage of active learning is that it picks the new examples in a smart way, so that the learner improves faster than when the extra training material is chosen randomly. However, active learning does not always perform better than random selection of new training examples. Most importantly, Sanjoy Dasgupta has shown that active learning only works better than random selection if the initial training set is large enough for the initial machine learner to make reliable predictions. The required size depends on the problem and is usually hard to estimate.
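Because the required initial size is hard to estimate, one simple precaution is to select new examples at random until the labelled set has reached some minimum size, and only then switch to uncertainty-based selection. The sketch below shows that rule. It is an illustrative heuristic, not a procedure taken from Dasgupta's work, and `choose_batch`, `warmup_size` and the toy `uncertainty` score are hypothetical; a suitable `warmup_size` would still have to be found per problem.

```python
import random


def choose_batch(pool, n_labelled, batch_size, uncertainty, warmup_size, rng):
    """Pick the next items to label.

    Until the labelled set reaches warmup_size, items are drawn at random,
    because a model trained on too few examples may give unreliable
    uncertainty estimates. After that, the most uncertain items are chosen.
    """
    if n_labelled < warmup_size:
        return rng.sample(pool, min(batch_size, len(pool)))
    return sorted(pool, key=uncertainty, reverse=True)[:batch_size]


def uncertainty(item):
    """Hypothetical score: items closest to 5 count as most uncertain."""
    return -abs(item - 5)


rng = random.Random(42)
pool = list(range(10))

# Few labels so far: fall back to random selection.
print(choose_batch(pool, n_labelled=3, batch_size=2,
                   uncertainty=uncertainty, warmup_size=20, rng=rng))
# Enough labels: pick the most uncertain items.
print(choose_batch(pool, n_labelled=50, batch_size=2,
                   uncertainty=uncertainty, warmup_size=20, rng=rng))  # [5, 4]
```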

Active learning at the Netherlands eScience Center

Since a lack of training data is a common problem in machine learning research, several projects at the Netherlands eScience Center are interested in applying active learning. I have already used the method in the project Automated Analysis of Online Behaviour on Social Media (2017) to obtain more political tweets labelled with the intention of the sender. Active learning has also been discussed in the meetings of the Machine Learning group of the eScience Center. Do you have any active learning tips and tricks for our group? Please let us know in the comments below!